Implementing a MIDI player in Kotlin from scratch

In this series I’ll try to show you how to implement a tracker-like environment in pure Kotlin. The goal is to divide this into 3 parts:

- Getting familiar with the MIDI protocol and its abstractions in the JVM standard library, and implementing a simple MIDI player using coroutines (this post)

- Introducing Open Sound Control (OSC) and its advantages over MIDI, and using SuperCollider for precise timing, synth design and sample playback

- Exploring the interactivity possibilities of Kotlin Scripting.

Built-in Java MIDI support

Recently I discovered that the standard JVM library contains a feature-rich implementation of the MIDI protocol. We can grab a MIDI file from the web and play it on the JVM with the following piece of code:

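```kotlin
import javax.sound.midi.MidiSystem

// Empty marker class, used only to locate the MIDI file on the classpath
class JvmMidiSequencer

fun main() {
    // Load the MIDI file from the resources folder
    val midiStream = JvmMidiSequencer::class.java.getResourceAsStream("/GiorgiobyMoroder.mid")
    // The default sequencer is connected to a default device (usually the built-in software synth)
    val sequencer = MidiSystem.getSequencer().apply { setSequence(midiStream) }
    sequencer.open()
    sequencer.start() // playback runs on the sequencer's own thread
}
```

You should hear something like this: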

You can find this code here. The goal of this post is to reimplement this player with pure Kotlin code using coroutines.
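Nothing in the snippet above closes the sequencer once the song is over. As a small addition (not part of the original code), the standard MetaEventListener interface can react to the End of Track meta event (type 0x2F) that the sequencer processes when the sequence ends. The helper name endOfTrackListener is hypothetical:

```kotlin
import javax.sound.midi.MetaEventListener

// Meta event type that marks the end of a track in a standard MIDI file
const val END_OF_TRACK_TYPE = 0x2F

// Hypothetical helper: returns a listener that calls onEnd when End of Track is processed
fun endOfTrackListener(onEnd: () -> Unit): MetaEventListener =
    MetaEventListener { meta ->
        // every other meta event (tempo, track name, ...) is ignored
        if (meta.type == END_OF_TRACK_TYPE) onEnd()
    }
```

It would be registered before starting playback, for example with sequencer.addMetaEventListener(endOfTrackListener { sequencer.close() }).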

Reverse engineering the MIDI events

First of all, let’s try to reverse engineer the contents of a MIDI file. Starting with sequencer.sequence we can easily discover that:

- a MIDI file is represented as a Sequence of Tracks

- each Track contains a sequence of MidiEvents

- each MidiEvent has a tick (the timestamp of the event) and a MidiMessage

So let’s print it out:

```kotlin
val sequence = sequencer.sequence
sequence.tracks.forEachIndexed { index, track ->
    println("Track $index")
    // Print only the first 10 events of each track
    (0 until minOf(10, track.size())).forEach { idx ->
        val event = track[idx]
        println("Tick ${event.tick}, message: ${event.message}")
    }
}
```

```
Track 0
Tick 0, message: javax.sound.midi.MetaMessage@22ff4249
Tick 0, message: javax.sound.midi.MetaMessage@5b12b668
Tick 0, message: com.sun.media.sound.FastShortMessage@1165b38
Tick 0, message: javax.sound.midi.MetaMessage@4c12331b
Tick 0, message: javax.sound.midi.MetaMessage@7586beff
Tick 0, message: com.sun.media.sound.FastShortMessage@3b69e7d1
Tick 240, message: com.sun.media.sound.FastShortMessage@815b41f
Tick 240, message: com.sun.media.sound.FastShortMessage@5542c4ed
Tick 480, message: com.sun.media.sound.FastShortMessage@1573f9fc
Tick 480, message: com.sun.media.sound.FastShortMessage@6150c3ec
```

OK, so apart from discovering that MidiMessage doesn’t have a proper toString implementation, we can see something that is specified in the MidiMessage javadocs: the events include not only the standard MIDI messages that a synthesizer can respond to, but also meta-events that can be used by sequencer programs.

These must be MetaMessages, so for now let’s focus on the standard MIDI messages - ShortMessages:

...

when (val message = event.message) {

is ShortMessage -> {

val command = message.command

val data1 = message.data1

val data2 = message.data2

println("Tick ${event.tick}, command: $command, data1: $data1, data2: $data2")

}

}

...Track 0

Tick 0, command: 192, data1: 38, data2: 0

Tick 0, command: 144, data1: 57, data2: 64

Tick 240, command: 128, data1: 57, data2: 0

Tick 240, command: 144, data1: 45, data2: 64

Tick 480, command: 128, data1: 45, data2: 0

Tick 480, command: 144, data1: 60, data2: 64

Tick 720, command: 128, data1: 60, data2: 0

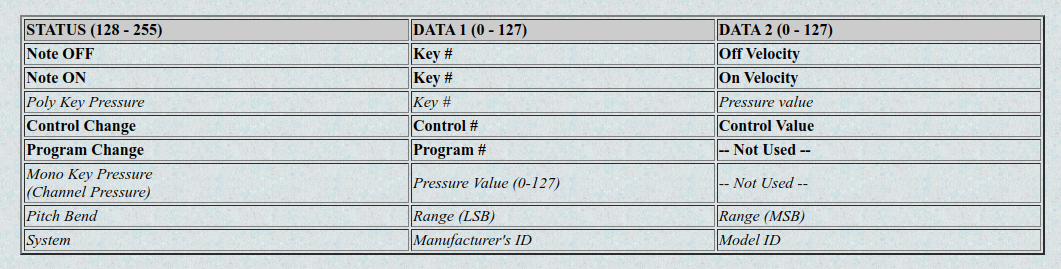

Tick 720, command: 144, data1: 45, data2: 64OK, that’s better! We can now see that each event has a commmand and two data fields. This could be a good time to look at MIDI specification - a nice brief is in this article. From this table we can discover that for playing notes the NOTE ON and NOTE OFF events are used, and their data1 is the key number (MIDI note) and data2 is the velocity of the sound:

Digging into the ShortMessage class, we can also find the command codes for both NOTE ON and NOTE OFF messages:

```java
public class ShortMessage extends MidiMessage {
    ...
    public static final int NOTE_OFF = 128;
    public static final int NOTE_ON = 144;
    ...
}
```

Given this knowledge, we can now interpret these events:

```
Tick 0, command: 144, data1: 57, data2: 64
Tick 240, command: 128, data1: 57, data2: 0
Tick 240, command: 144, data1: 45, data2: 64
Tick 480, command: 128, data1: 45, data2: 0
```

as:

- at tick 0, pressing the key with note 57 (A) with velocity 64

- at tick 240, releasing the key with note 57

- at tick 240, pressing the key with note 45 (the A one octave lower) with velocity 64

- at tick 480, releasing the key with note 45
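The manual decoding above can also be done in code. This is a minimal sketch, not part of the original player, and describe is a hypothetical helper. It also covers command 192 from the first output line, which is ShortMessage.PROGRAM_CHANGE (an instrument change, here to program 38), and treats a NOTE ON with velocity 0 as a NOTE OFF, as the MIDI convention allows:

```kotlin
import javax.sound.midi.ShortMessage

// Hypothetical helper: turns a ShortMessage into the kind of reading done by hand above
fun describe(msg: ShortMessage): String {
    val what = when {
        msg.command == ShortMessage.NOTE_ON && msg.data2 > 0 ->
            "NOTE ON key=${msg.data1} velocity=${msg.data2}"
        // NOTE ON with velocity 0 is treated like NOTE OFF by MIDI convention
        msg.command == ShortMessage.NOTE_ON || msg.command == ShortMessage.NOTE_OFF ->
            "NOTE OFF key=${msg.data1}"
        msg.command == ShortMessage.PROGRAM_CHANGE ->
            "PROGRAM CHANGE program=${msg.data1}"
        else ->
            "command=${msg.command} data1=${msg.data1} data2=${msg.data2}"
    }
    // command and channel are the upper and lower halves of the status byte
    return "$what on channel ${msg.channel}"
}
```

For example, describe(ShortMessage(ShortMessage.NOTE_ON, 0, 57, 64)) gives "NOTE ON key=57 velocity=64 on channel 0".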

Modeling the melody

OK, now that we know how MIDI files are structured, it’s a good time to think about our own representation of a melody. I think it’s a good idea to “resolve” two issues with MIDI events:

- Each NOTE ON event must be “terminated” with a corresponding NOTE OFF event; this could cause problems when the NOTE OFF event is missing, so a better idea is to just store a note duration, as in sheet music notation.

- Ticks might be good for machines, but it would be more readable to express the time of notes in beats and set the song tempo in beats per minute (BPM).
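Incidentally, the MetaMessages we skipped earlier can suggest a starting BPM. A Set Tempo meta event (type 0x51) stores the tempo as microseconds per quarter note in 3 big-endian bytes, so BPM = 60,000,000 / microseconds. A minimal sketch, assuming the file contains such an event and that one beat is a quarter note; tempoBpm and initialTempoBpm are hypothetical helper names:

```kotlin
import javax.sound.midi.MetaMessage

// Meta event type of "Set Tempo": 3 bytes, microseconds per quarter note
const val SET_TEMPO_TYPE = 0x51

// Hypothetical helper: BPM of a Set Tempo message, or null for any other meta event
fun MetaMessage.tempoBpm(): Double? {
    val bytes = data // getData() returns a copy of the payload
    if (type != SET_TEMPO_TYPE || bytes.size < 3) return null
    val microsPerQuarter = ((bytes[0].toInt() and 0xFF) shl 16) or
        ((bytes[1].toInt() and 0xFF) shl 8) or
        (bytes[2].toInt() and 0xFF)
    return 60_000_000.0 / microsPerQuarter
}

// Hypothetical helper: tempo of the earliest Set Tempo event across all tracks
fun javax.sound.midi.Sequence.initialTempoBpm(): Double? =
    tracks.flatMap { track -> (0 until track.size()).map { track[it] } }
        .sortedBy { it.tick }
        .firstNotNullOfOrNull { (it.message as? MetaMessage)?.tempoBpm() }
```

The result is a Double, so it would need rounding to an Int before being used as the bpm of the Metronome introduced later.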

Using these assumptions we can introduce the Note class as:

```kotlin
data class Note(
    val beat: Double,     // e.g. 0.0, 0.25, 1.5
    val midinote: Int,    // e.g. 60, 57
    val duration: Double, // in beats: 0.25, 0.5
    val amp: Float = 1.0f // 0.0f - 1.0f
)
```

To translate ticks to beats, we can just use the sequence’s resolution field, since most MIDI files use the PPQ division type:

```java
public static final float PPQ;
// The tempo-based timing type, for which the resolution is expressed in pulses (ticks) per quarter note.
```

Treating one beat as one quarter note, a note’s beat is therefore its tick divided by the resolution. So, to translate MIDI events into a list of our Note objects, we can write this extension function:

```kotlin
import java.math.RoundingMode
import javax.sound.midi.ShortMessage

fun javax.sound.midi.Sequence.toNotes(): List<Note> =
    tracks.flatMap { track ->
        (0 until track.size()).mapNotNull { idx ->
            val event = track[idx]
            val message = event.message
            // Only a NOTE ON with non-zero velocity starts a note
            // (a NOTE ON with velocity 0 is conventionally a NOTE OFF)
            if (message is ShortMessage && message.command == ShortMessage.NOTE_ON && message.data2 > 0) {
                // ticks -> beats, rounded to 2 decimal places
                val beat = (event.tick / resolution.toDouble())
                    .toBigDecimal().setScale(2, RoundingMode.HALF_UP)
                    .toDouble()
                // fixed duration of 0.25 beats; velocity 0-127 scaled to amp 0.0-1.0
                Note(beat, message.data1, 0.25, message.data2 / 127.0f)
            } else {
                null
            }
        }
    }
```

To simplify, I just set each Note’s duration to a constant 0.25 beats instead of calculating it from the corresponding NOTE OFF event.
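Calculating real durations is not much harder. A minimal sketch, not the author’s code: toNotesWithDurations is a hypothetical variant of toNotes() that remembers each NOTE ON per (channel, key) and closes it at the matching NOTE OFF (or NOTE ON with velocity 0). It assumes a PPQ file, skips the 2-decimal rounding for brevity, and repeats the Note class so the block compiles on its own:

```kotlin
import javax.sound.midi.ShortMessage

// Same shape as the Note class above, repeated so this sketch compiles on its own
data class Note(val beat: Double, val midinote: Int, val duration: Double, val amp: Float = 1.0f)

// Hypothetical variant of toNotes(): pairs every NOTE ON with its NOTE OFF to get real durations.
// Assumes a PPQ file, so ticks / resolution = beats (quarter notes).
fun javax.sound.midi.Sequence.toNotesWithDurations(): List<Note> {
    val ticksPerBeat = resolution.toDouble()
    val notes = mutableListOf<Note>()
    for (track in tracks) {
        // Notes still sounding: (channel, key) -> (start tick, velocity).
        // A repeated NOTE ON on a sounding key simply replaces the pending entry.
        val pending = mutableMapOf<Pair<Int, Int>, Pair<Long, Int>>()
        for (idx in 0 until track.size()) {
            val event = track[idx]
            val msg = event.message as? ShortMessage ?: continue
            val key = msg.channel to msg.data1
            val isNoteOn = msg.command == ShortMessage.NOTE_ON && msg.data2 > 0
            // NOTE ON with velocity 0 counts as NOTE OFF
            val isNoteOff = msg.command == ShortMessage.NOTE_OFF ||
                (msg.command == ShortMessage.NOTE_ON && msg.data2 == 0)
            if (isNoteOn) {
                pending[key] = event.tick to msg.data2
            } else if (isNoteOff) {
                val (startTick, velocity) = pending.remove(key) ?: continue
                notes += Note(
                    beat = startTick / ticksPerBeat,
                    midinote = msg.data1,
                    duration = (event.tick - startTick) / ticksPerBeat,
                    amp = velocity / 127.0f,
                )
            }
        }
    }
    // tracks are processed one after another, so sort to get a single timeline
    return notes.sortedBy { it.beat }
}
```

With a hypothetical resolution of 480, the first two notes from the output above (57 from tick 0 to 240, 45 from tick 240) would start at beats 0.0 and 0.5.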

Implementing the Player

We are now ready for the final part of the implementation: the Player. The most important thing for now is timing, so let’s introduce a helper Metronome class to convert BPM to milliseconds:

```kotlin
data class Metronome(var bpm: Int) {
    // length of one beat in whole milliseconds (truncated)
    val millisPerBeat: Long
        get() = (secsPerBeat * 1000).toLong()

    private val secsPerBeat: Double
        get() = 60.0 / bpm
}
```

So, for example, for a common 120 BPM we should get 0.5 seconds per beat.
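To double-check the conversion: millisPerBeat is 60 / BPM seconds, times 1000, truncated to whole milliseconds. The class is repeated so the snippet compiles on its own, and offsetMillis is a hypothetical helper that mirrors the beat-to-milliseconds step the Player performs:

```kotlin
data class Metronome(var bpm: Int) {
    val millisPerBeat: Long
        get() = (secsPerBeat * 1000).toLong()

    private val secsPerBeat: Double
        get() = 60.0 / bpm
}

// Hypothetical helper: offset of a beat position from the start, as (beat * millisPerBeat).toLong()
fun offsetMillis(beat: Double, metronome: Metronome): Long =
    (beat * metronome.millisPerBeat).toLong()
```

At 120 BPM a beat is 500 ms, so beat 1.5 starts 750 ms in. At 110 BPM, used below for Giorgio by Moroder, the truncation gives 545 ms instead of about 545.45 ms, so note offsets drift slightly early as beats accumulate.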

To implement a Player we’ll use coroutines, which let us write really simple code: we just call the delay function to wait until the timestamp of the next note. This is really neat compared to traditional multithreaded code, where you would have to block the running thread with Thread.sleep calls; delay suspends the coroutine without blocking its thread.

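```kotlin
import java.time.Duration
import java.time.LocalDateTime
import kotlinx.coroutines.CoroutineScope
import kotlinx.coroutines.Dispatchers
import kotlinx.coroutines.delay
import kotlinx.coroutines.launch

abstract class Player(
    private val notes: List<Note>,
    protected val metronome: Metronome,
    private val scope: CoroutineScope = CoroutineScope(Dispatchers.Default)
) {
    // Runs `function` at the given wall-clock time without blocking a thread
    // (delay returns immediately if `time` is already in the past)
    fun schedule(time: LocalDateTime, function: () -> Unit) {
        scope.launch {
            delay(Duration.between(LocalDateTime.now(), time).toMillis())
            function.invoke()
        }
    }
}
```

Then, to play all the notes, we just need to schedule each of them at its beat, converted to milliseconds, after some starting time: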

```kotlin
import java.time.temporal.ChronoUnit

abstract class Player( ... ) {
    private var playing = true

    // Implemented by concrete players, e.g. one that sends MIDI messages
    abstract fun playNote(note: Note, playAt: LocalDateTime)

    fun playNotes(at: LocalDateTime) {
        notes.forEach { note ->
            // beat position -> absolute time, relative to `at`
            val playAt = at.plus((note.beat * metronome.millisPerBeat).toLong(), ChronoUnit.MILLIS)
            schedule(playAt) {
                if (playing) {
                    playNote(note, playAt)
                }
            }
        }
    }
    ...
}
```

To play MIDI notes, we need to pass a javax.sound.midi.Receiver instance, which lets us send MidiMessages. We send NOTE ON immediately and schedule NOTE OFF after the note’s duration:

```kotlin
import java.time.LocalDateTime
import java.time.temporal.ChronoUnit
import javax.sound.midi.Receiver
import javax.sound.midi.ShortMessage
import kotlinx.coroutines.CoroutineScope

class MidiPlayer(
    private val receiver: Receiver,
    notes: List<Note>,
    metronome: Metronome,
    scope: CoroutineScope
) : Player(notes, metronome, scope) {

    override fun playNote(note: Note, playAt: LocalDateTime) {
        val midinote = note.midinote
        val midiVel = (127f * note.amp).toInt() // amp 0.0-1.0 -> velocity 0-127
        // channel 0; a timestamp of -1 means no time-stamping
        val noteOnMsg = ShortMessage(ShortMessage.NOTE_ON, 0, midinote, midiVel)
        receiver.send(noteOnMsg, -1)
        val noteOffAt = playAt.plus((note.duration * metronome.millisPerBeat).toLong(), ChronoUnit.MILLIS)
        schedule(noteOffAt) {
            val noteOffMsg = ShortMessage(ShortMessage.NOTE_OFF, 0, midinote, midiVel)
            receiver.send(noteOffMsg, -1)
        }
    }
}
```

Summing it up, the code to play the first 16 beats of Giorgio by Moroder looks like this:

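```kotlin
import java.time.LocalDateTime
import javax.sound.midi.MidiSystem
import kotlinx.coroutines.runBlocking

class MidiSequencer

fun main() {
    val midiStream = MidiSequencer::class.java.getResourceAsStream("/GiorgiobyMoroder.mid")
    // The sequencer is only used here to parse the file into a Sequence
    val sequencer = MidiSystem.getSequencer().apply { setSequence(midiStream) }
    val synthesiser = MidiSystem.getSynthesizer().apply { open() }
    // filter rather than takeWhile: toNotes() concatenates tracks, so beats are not globally sorted
    val melody = sequencer.sequence.toNotes().filter { it.beat < 16 }
    // runBlocking returns only after all scheduled NOTE ON/NOTE OFF coroutines have finished
    runBlocking {
        val metronome = Metronome(bpm = 110)
        val player = MidiPlayer(synthesiser.receiver, melody, metronome, this)
        player.playNotes(LocalDateTime.now())
    }
}
```

We can now also very simply turn it into a looper, by treating these 16 beats as a bar and playing it over and over. To make this possible, let’s pass the loop length to the Metronome: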

```kotlin
data class Metronome(val bpm: Int, val beatsPerBar: Int = 16) {
    ...
    // length of one loop iteration ("bar") in milliseconds
    val millisPerBar: Long
        get() = beatsPerBar * millisPerBeat
    ...
}
```

And then let’s add a playBar function to our generic Player:

```kotlin
abstract class Player( ... ) {
    ...
    fun playBar(bar: Int, at: LocalDateTime) {
        if (!playing) return
        println("Playing bar $bar")
        playNotes(at)
        // The next bar starts exactly one bar after `at` (not after now()),
        // so a late callback does not shift the following bars
        val nextBarAt = at.plus(metronome.millisPerBar, ChronoUnit.MILLIS)
        schedule(nextBarAt) {
            playBar(bar + 1, nextBarAt)
        }
    }
    ...
}
```

Then we just need to start the looper by playing the first bar:

```kotlin
fun main() {
    ...
    runBlocking {
        val metronome = Metronome(bpm = 90)
        val player = MidiPlayer(synthesiser.receiver, melody, metronome, this)
        // each bar schedules the next one, so this keeps looping while `playing` is true
        player.playBar(1, LocalDateTime.now())
        ...
    }
}
```

Now we’re ready to play the final result, with the added bonus of an adjustable tempo. Here is an example at 90 BPM:

The complete code for MidiSequencer is here.
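To check the timing without any sound, a Receiver that only records what it is sent can stand in for the synthesiser. A minimal debugging sketch, not part of the original code; the LoggingReceiver class is hypothetical and its clock is injectable so the output can be checked deterministically:

```kotlin
import java.util.Collections
import javax.sound.midi.MidiMessage
import javax.sound.midi.Receiver
import javax.sound.midi.ShortMessage

// Hypothetical debugging aid: records and prints what it receives instead of making sound
class LoggingReceiver(private val clock: () -> Long = System::currentTimeMillis) : Receiver {
    private val start = clock()

    // Player sends from several coroutines, possibly on different threads
    val log: MutableList<String> = Collections.synchronizedList(mutableListOf<String>())

    override fun send(message: MidiMessage, timeStamp: Long) {
        val elapsed = clock() - start
        val line = if (message is ShortMessage) {
            "+$elapsed ms command=${message.command} data1=${message.data1} data2=${message.data2}"
        } else {
            "+$elapsed ms ${message.javaClass.simpleName}"
        }
        log += line
        println(line)
    }

    override fun close() {}
}
```

It could replace synthesiser.receiver in main, e.g. MidiPlayer(LoggingReceiver(), melody, metronome, this), to print when each NOTE ON (144) and NOTE OFF (128) is sent.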

Connecting to a real synthesiser

Finally, using MIDI as the interface gives us the opportunity to connect to various software and hardware devices. If you don’t have a hardware synth, you can use, for example, the open-source Surge XT, which sounds pretty good.

ℹ️ For Linux users: you should install the Virtual MIDI kernel driver to trigger software synth events; see this link for detailed instructions.

To connect to a given MIDI device, we have to filter the MidiSystem.getMidiDeviceInfo() list by description. And don’t forget to open the device.
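To find the right description string in the first place, it can help to list every device the JVM sees. A minimal sketch; describeMidiDevices is a hypothetical helper, and the maxReceivers column is included because only devices that accept receivers can be sent notes:

```kotlin
import javax.sound.midi.MidiSystem

// Hypothetical helper: one line per MIDI device visible to the JVM
fun describeMidiDevices(): List<String> =
    MidiSystem.getMidiDeviceInfo().map { info ->
        // maxReceivers: -1 = unlimited, 0 = nothing can be sent to this device
        val maxReceivers = runCatching { MidiSystem.getMidiDevice(info).maxReceivers }
            .map { it.toString() }
            .getOrDefault("unavailable")
        "name='${info.name}' description='${info.description}' maxReceivers=$maxReceivers"
    }
```

Calling describeMidiDevices().forEach(::println) prints the candidates; the exact names depend on your OS and drivers.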

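```kotlin
import javax.sound.midi.MidiDevice
import javax.sound.midi.MidiSystem

private fun midiDevice(desc: String): MidiDevice =
    MidiSystem.getMidiDeviceInfo()
        .map { MidiSystem.getMidiDevice(it) }
        // skip input-only ports: we need a device we can send to (-1 = unlimited receivers)
        .first { it.deviceInfo.description.startsWith(desc) && it.maxReceivers != 0 }
        .apply { open() }
```

Then we just need to pass this device’s receiver to the Player instead of synthesiser.receiver. In this example I’m looking for VirMIDI, which was created by the Linux kernel driver: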

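```kotlin
fun main() {
    ...
    val device = midiDevice("VirMIDI")
    runBlocking {
        ...
        val receiver = device.receiver
        val player = MidiPlayer(receiver, melody, metronome, this)
        ...
    }
    // Close the device only after runBlocking has waited for all scheduled notes;
    // the end of the runBlocking block itself runs before the scheduled coroutines
    device.close()
}
```

Here is a sample session with Surge XT: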

It’s a Kotlin sequencer playing a real synth, enjoy! 😍